DOI: https://doi.org/10.59350/9tp71-zmh06

Guest post by Veronica Herrera, Assistant Professor of Political Science at the University of Connecticut.

In the fall of 2013, I participated in a pilot program for the Qualitative Data Repository at Syracuse University on “active citation” (AC). I was actually producing the revise-and-resubmit (R&R) version of the article on which I was piloting AC—subsequently published in World Development, “Does Commercialization Undermine the Benefits of Decentralization for Local Services Provision? Evidence from Mexico’s Urban Water and Sanitation Sector” (Herrera 2014)—so it was a unique opportunity to write up research while aiming for new transparency practices. Before I started, I was not familiar with the emerging field of research transparency for qualitative researchers. I benefitted from QDR’s “transparency memos,” which were blueprints for researchers on how to approach taking the concepts of “data, analytic and production transparency” (Moravcsik 2014) and applying them to our own work. Below are some of the main things I learned.

Data sharing vs. production transparency vs. analytic transparency. I think there is a lot of confusion about what transparency entails for qualitative researchers. One of the things I learned through this process is that sharing one’s data is just one aspect of transparency. Giving readers more information about how your data were produced, either by you or by someone else (production transparency), and explaining the pathway from data source to analytical claim (analytic transparency) are not the same as sharing some portion of the data. Production and analytic transparency can both be achieved without sharing the data themselves.

Better citations without transparency annotations are possible. What is a “transparency annotation”? Such annotations are similar to really excellent (or “meaty”) footnotes, but are more structured. As a QDR piloteer, I found it really helpful to get some guidance on what these should look like. Creating them forced me into a particular structure and organization that was very helpful. Although QDR’s guidance on this point has evolved, the current suggestions for engaging in Annotation for Transparent Inquiry (ATI), which are available here, list the following four components for each annotation anchored to a text segment:

- A full citation to the underlying data source;

- An analytic note: discussion that illustrates how the data were generated and how they support the empirical claim or conclusion being annotated in the text;

- A source excerpt: typically 100 to 150 words from a textual source; for handwritten material, audiovisual material, or material generated through interviews or focus groups, an excerpt from the transcription;

- A source excerpt translation: if the excerpt is not in English, a translation of the key passage(s).
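
One way to keep these four components together while drafting is a small record per annotation. The sketch below is only an illustration and is not QDR’s or ATI’s actual format: the class name, field names, the language code field, and the placeholder values are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TransparencyAnnotation:
    """Hypothetical record for one annotation anchored to a text segment."""

    anchored_text: str   # passage in the article that the annotation is attached to
    full_citation: str   # full citation to the underlying data source
    analytic_note: str   # how the data were generated and how they support the claim
    source_excerpt: str  # excerpt from the source (or from its transcription)
    excerpt_language: str = "en"               # assumed language code for the excerpt
    excerpt_translation: Optional[str] = None  # translation of key passage(s), if not English

    def missing_components(self) -> List[str]:
        """Return the names of components that are still empty."""
        missing = []
        for name in ("full_citation", "analytic_note", "source_excerpt"):
            if not getattr(self, name).strip():
                missing.append(name)
        # A translation is only expected when the excerpt is not in English.
        if self.excerpt_language != "en" and not (self.excerpt_translation or "").strip():
            missing.append("excerpt_translation")
        return missing

    def excerpt_word_count(self) -> int:
        """Count whitespace-separated words in the excerpt."""
        return len(self.source_excerpt.split())


# Placeholder values only; no real source is quoted here.
draft = TransparencyAnnotation(
    anchored_text="<claim in the article>",
    full_citation="<full citation>",
    analytic_note="",
    source_excerpt="<excerpt in Spanish>",
    excerpt_language="es",
)
print(draft.missing_components())
```

A check like `missing_components` is just a drafting aid for spotting annotations that still lack an analytic note or a translation; the word count can be compared by hand against whatever excerpt length the guidance suggests.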

Selecting what to be transparent about. Researchers choose which analytical claims need a “transparency annotation” and which do not. Just as authors don’t include a citation for every single sentence in a journal article, and lawyers presenting a case offer only the exhibits they believe will most strongly sway the jury or judge, researchers should and do select the appropriate number and placement of annotations within their text. Typically annotations would be created for the most critical analytical, descriptive or causal claims or for a claim that will be controversial vis-a-vis established literature.

“Fair Use” is your friend: Using supplements as a proxy for data sharing. Fair use in U.S. copyright law allows scholars to include a portion of work that is the intellectual property of someone else in their own work for scholarly purposes, such as comment or analysis. Offering a 50-150-word excerpt from a data source is a safe and easy way to share a small portion of the data in an appendix without infringing on copyright. For sources that are widely available, it will be easy for others to find the source if they are so inclined (for example, for scholarly sources, or publicly available government documents or international development reports).

Data sharing is not all or nothing. Researchers can choose which data to share and which not to share within a single project. Researchers may choose to share a select portion of sources, based on whether or not any one of them is critical to the claims being made and the overall analytic, causal or descriptive inference of the research; and/or based on copyright and human subjects considerations. Moreover, they can share different amounts of data from different data sources – a small segment in the case of one data source, or the whole source in another case. Scholars are only encouraged to share data sources if they can do so within copyright law, while complying with human subjects protection protocols, and without assuming an undue burden. In my QDR experience, I shared some of my data but not all of it, and I frequently relied on sharing excerpts under the “fair use” copyright principle.

Data repositories offer differentiated sharing. Sources can be shared in a data repository like QDR, which houses data and provides differentiated data sharing options. Researchers are able to set the data access conditions for each project, or for each data source; the options include making data available to registered QDR users only, making only data summaries available, and requiring secondary users to request permission to access certain data files. Different access controls can be placed on different data from the same project.
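
The idea of different access conditions within one project can be sketched in a few lines. This is a hypothetical illustration, not QDR’s actual system: the `Access` levels simply mirror the options named above, and the project-default-plus-override logic and the source identifiers are assumptions for the example.

```python
from enum import Enum
from typing import Dict


class Access(Enum):
    """Hypothetical access levels mirroring the options described above."""

    REGISTERED_USERS = "available to registered users only"
    SUMMARY_ONLY = "only a data summary is available"
    BY_REQUEST = "secondary users must request permission"


def effective_access(
    source_id: str,
    project_default: Access,
    overrides: Dict[str, Access],
) -> Access:
    """Return the per-source condition if one is set, else the project default."""
    return overrides.get(source_id, project_default)


# Hypothetical source identifiers for one project.
overrides = {"interview_notes": Access.BY_REQUEST}

for source_id in ("government_report", "interview_notes"):
    level = effective_access(source_id, Access.REGISTERED_USERS, overrides)
    print(f"{source_id}: {level.value}")
```

Here the government report falls back to the project-wide setting, while the interview notes carry a stricter condition of their own.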

The Burdens: Time and Effort

Technology. I was among the first researchers to use the software, which was still being developed at the time, and a number of technical difficulties arose that made the process take longer than it otherwise would have. These are being worked out now, and ease of use is improving quickly. You can read more about the transition to an easier-to-use technology for QDR users here, and see a very cool example of the current ATI technology platform using hypothes.is here.

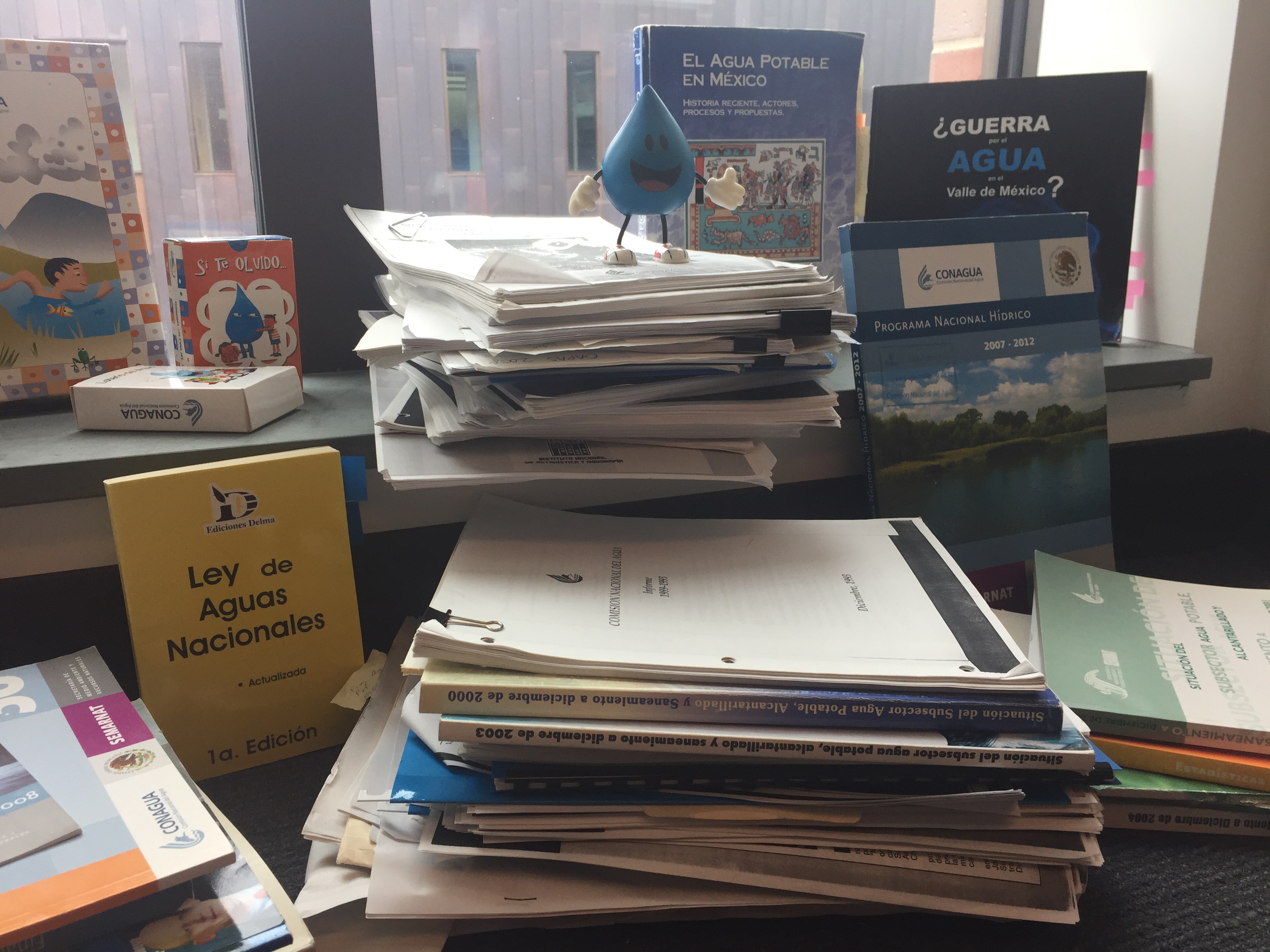

Data organization. Engaging in Active Citation (AC) made me organize my data sources better: putting all of my sources in one well-labeled location, labeling sources clearly and creating an index for my sources. This level of organization was necessary for the AC project even if I didn’t actually share a particular source, because I had to have a copy of it or notes about it to be able to create a transparency annotation for it. I quickly realized that if an item wasn’t in my own saved collection, it couldn’t serve as a direct source. That is, a claim could be based on background knowledge and I could note that in a footnote, but I couldn’t cite a source that didn’t exist. This seems obvious, but I think researchers sometimes confuse what they are citing as background knowledge with what they are citing as a very concrete source, and doing a project of this type quickly sorts that out. This retroactive reorganization of my data took time the first time I did it, but since then I have followed a similar pattern of data organization for any new projects, and so it’s now my data organization system.

Logistics and Admin. Coordinating with QDR took time, and I relied on RA support for small administrative tasks that were time-consuming but necessary. These tasks included, for example, getting data sources organized and properly labeled, and in some cases scanning or finding sources that I had initially lost or misplaced. These are the types of activities you may do as a researcher to get your project organized anyway, and I now organize my documents in a similar fashion for new projects. So, while there are start-up costs, the return is really great.

Translating excerpts was a chore. I translated my source excerpts from Spanish to English, and that took a while. Moving forward, it seems clear that translating should remain what it is – just a suggestion – because having the source in a language other than English is not against transparency practices per se; it is simply less useful for secondary users who don’t speak that language. This item brings up the question of the value of transparency for different audiences.

Production transparency notes. Noting where I got the data source and/or who gave it to me was not difficult, but it did force me to go through interview notes and some audio files a few times. This could be avoided if you keep track of information about data capture strategies during data collection. (Which I now do, believe me!) Nevertheless, this component was the most straightforward and least time-consuming for my particular project.

Analytical transparency notes. This was the hardest part, because it forces you to really think through why and how a source is supporting a claim. Researchers will use their own training, epistemology, and research objectives and methodologies here, and there will be a lot of choices made about what to include, and how to make it clear to the reader what your thinking was in a concise way. This process definitely forced me to take out some sources that I had used in the first draft of the article submission as I worked on my R&R. After really thinking through certain claims and sources using the logic of analytical transparency, I realized that some sources no longer fit well. Some sources were illustrative but not representative (when I realized I needed them to be the latter and not the former). Sometimes I realized that my reasoning about using sources was too vague and unclear. So I chose a better source or re-articulated the claim to fit more clearly what the related source was conveying. This process of writing analytical notes while drafting or revising, while difficult, makes our work more rigorous. Doing this for QDR has changed my practices moving forward for the better.

What about background knowledge, context or priors? The AC guidelines I used didn’t offer pointers for incorporating the background knowledge and context that underlie some of my claims. This is something that is of concern to many qualitative researchers, and for good reason. However, researchers can use a methodology section or, better yet, a methodological narrative in an appendix to clarify the experience, background knowledge and scope of authority that informed the overall project. This type of detailed explanation is incredibly helpful in general and will be useful to researchers evaluating the work. We see this sometimes in books in a way that is particularly helpful (e.g., Abers & Keck, 2006; Hadden, 2015) but less often in journal articles due to word limits. Adopting this approach more widely moving forward, especially in the form of online appendices when the printed word count is limited, would be good practice.

References

Abers, R. N., and M. E. Keck. 2006. “Muddy Waters: The Political Construction of Deliberative River Basin Governance in Brazil.” International Journal of Urban and Regional Research 30: 601–622. https://doi.org/10.1111/j.1468-2427.2006.00691.x

Hadden, Jennifer. 2015. Networks of Contention: The Divisive Politics of Climate Change. Cambridge University Press. https://doi.org/10.1017/CBO9781316105542

Herrera, Veronica. 2014. “Does Commercialization Undermine the Benefits of Decentralization for Local Services Provision? Evidence from Mexico’s Urban Water and Sanitation Sector.” World Development 56 (April): 16–31. https://doi.org/10.1016/j.worlddev.2013.10.008

Moravcsik, Andrew. 2014. “Transparency: The Revolution in Qualitative Research.” Symposium: Openness in Political Science. PS: Political Science & Politics 47(1): 48–53. https://doi.org/10.1017/S1049096513001789