DOI: https://doi.org/10.59350/1z5vc-nq568

Authors and Reviewers Gear up for November Workshop

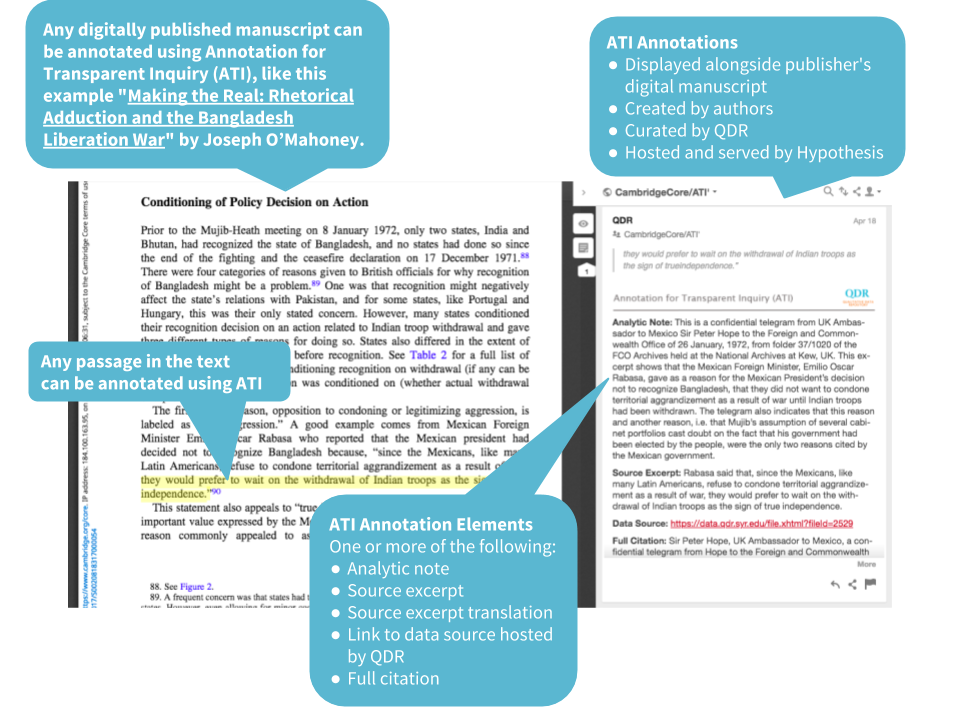

Annotation for Transparent Inquiry (ATI) is a new approach to increasing the openness of qualitative and multi-method research across academic disciplines. To develop and promote the approach, QDR works with and supports scholars who wish to employ ATI. To date we have organized two workshops (both funded by the Robert Wood Johnson Foundation), and we look forward to working with more scholars who are interested in using ATI to increase the transparency of their scholarship.

The first ATI workshop, which took place in February 2018, was attended by scholars who used ATI to annotate manuscripts they had previously published. You can read more about the first workshop here and see the articles that were included here.

In April 2018, QDR announced the ATI Challenge, a competition to select a group of authors to use ATI to annotate manuscripts as they are writing them. QDR was overwhelmed by the response to our call: we received 80 proposals from scholars on five continents, spanning a range of disciplines and a wide variety of topics. We enlisted the help of an interdisciplinary selection committee of experienced qualitative and multi-method researchers, and selected 19 proposals for participation in the second workshop of the ATI Initiative.

In contrast with the annotated articles presented at the February workshop, the pieces these scholars are annotating have not yet been accepted for publication and are at various stages of the writing and editing process: some scholars are writing first drafts, while others are somewhere between a completed first draft and the revisions requested in a journal’s “revise and resubmit.” We are very excited to be working with this extraordinary group of researchers and projects over the coming months.

Evaluating ATI

The ATI Initiative aims to provide scholars with the tools to enhance their scholarship, and increase its transparency, through annotation. Two other important goals of the project are evaluating the utility of ATI and facilitating the continued development of tools and processes for using this new approach to openness.

The Initiative’s most important resource for evaluation is its reviewers. Each of the manuscripts included in the Initiative is evaluated by a subject-matter expert. First, the reviewer reads the article or manuscript without annotations, indicating where they would expect a claim or passage to be annotated. Next, the reviewer re-reads the article or manuscript with its ATI annotations and evaluates their effectiveness using a rubric created by QDR (.docx). The results of this two-step assessment help QDR understand how annotations enhance research and increase its transparency. The evaluations also address larger questions about how annotations (particularly when they are linked to underlying data sources) affect the way published research is read and used. The interaction between reviewers and authors is at the center of the ATI workshops.

In addition, we ask authors to keep a detailed “logbook” documenting their thought process as they annotate, as well as any issues they encounter with the ATI technology or instructions (the instructions used for the first workshop are available online). Finally, before each workshop authors are asked to complete a survey on the process, promise, and pitfalls of using ATI. Understanding authors’ impressions and reactions is important as we continue to develop ATI. As with any work oriented toward data sharing and transparency, reducing the burden ATI places on authors is crucial, given that rewards and incentives for such work are still scarce. (See the thoughts of ATI author Paul Musgrave and Sebastian Karcher on “why ATI” on the Duck of Minerva blog.)

Some Very Preliminary Results from the First ATI Workshop

We have only just begun to analyze the data provided by scholars who participated in the first ATI workshop in February 2018 (and will collect considerably more data from the second workshop, to take place in November). But what better place than a blog to present some highly speculative, very tentative initial findings! We highlight two key preliminary results.

First, one of the most interesting preliminary findings from the first workshop concerns what participants found challenging about ATI. In a questionnaire completed by all authors who participated in the first workshop, we asked which activities (from locating sources to writing annotations) were most challenging. The activity respondents rated most difficult overall was selecting passages to annotate. Choosing, it turns out, is difficult.

Authors’ concerns on this point were echoed by reviewers, and authors’ choices about which sections of their articles to annotate were a focal point of conversation at the workshop. No a priori set of rules for what to annotate has yet emerged, and indeed none may ever emerge: different authors addressing different tasks may well annotate different things. Nevertheless, authors should explain the logic underpinning their decisions about what (and what not) to annotate.

Second, authors speculated that adding annotations retrospectively was significantly more time-consuming and difficult than annotating would have been had they planned for ATI from the outset. (Of course, since they did not have the comparative experience of annotating while writing, we’ll reiterate that authors were speculating!) Even the second workshop won’t include researchers whose data management was oriented toward ATI from the start; while participants in that workshop are annotating as they write, for the most part their data collection preceded their participation in the ATI Initiative. Nonetheless, by comparing data from participants in the first and second workshops, we should gain some insight into whether annotating earlier in the writing process reduces the burden on researchers.

Using ATI

You do not have to be part of the ATI Initiative to annotate your work using ATI. Any researcher can use ATI, and we encourage you to do so! Our instructions for using ATI rely on simple, familiar tools, with QDR curators performing the conversion into ATI’s web annotation format. Contact us at qdr@syr.edu if you are interested in learning more!